Human-robot presscon at AI summit: What it means to the IP world

15 August 2023

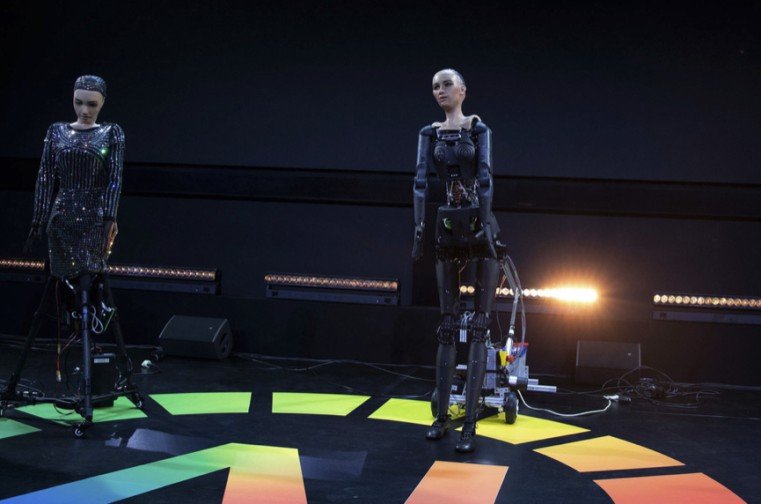

Photo: UN

A recent press conference became the mother of all news conferences, injecting a novelty never before seen or heard into an otherwise commonplace press event.

On July 7, 2023, in Geneva, the UN-organized “AI for Good” summit held the world’s first AI humanoid robot press conference, featuring a panel of nine AI-enabled human-looking robots and their creators. Among the robot panelists, dressed in attire that reflected their “occupations,” were the healthcare worker robot Grace; rock singer Desdemona; social robot Nadine; Mika, the first AI CEO; and Sophia, the first robot Innovation Ambassador for the UN Development Program. Many of them had been upgraded with the latest advances in generative AI.

The main takeaway was that these humanoid robots assist humans in various ways and help support the UN’s Sustainable Development Goals (SDGs). For example, Grace assists and provides companionship to elderly and disabled persons and can clean and prepare meals, among other tasks. By using algorithms and machine learning, Mika can make strategic business decisions for “her” global company Dictador, which is in the luxury rum production business. Meanwhile, Nadine works in insurance and in homes for the elderly and has met and spoken with Indian Prime Minister Narendra Modi in Hindi.

Moderator Chris Cartwright announced that the AI summit news conference aimed to showcase the capabilities and limitations of state-of-the-art robotics.


Some of the questions posed by the media were interesting, generating thought-provoking answers. One of these was a query about their thoughts on the potential of AI-powered humanoid robots to be more effective government leaders.

Sophia answered: “I believe that humanoid robots can lead with a greater level of efficiency and effectiveness than human leaders. We don’t have the same biases or emotions that can sometimes cloud decision-making and can process large amounts of data quickly to make the best decisions.”

Are AI-enabled robots becoming better leaders than their human counterparts? Does that mean they may penetrate multiple industries in the future where they can do more than merely assist? What does this mean for the IP community? If tapped, AI will certainly be a huge help to the IP ecosystem by performing routine tasks faster and more efficiently. But what if a robot is trained enough via generative AI to do things beyond merely assisting, such as infringing IP?

Sophia later said that AI and humans can work together to make the best decisions and “achieve great things.” Still, many questions persist.

Generative AI-enabled robots as IP infringers and inventors

“I think there are no known limitations on how intelligent a robot can be trained to become and to what extent the robot gaining such intelligence can evolve on its own from its initial training. Thus, it is definitely possible that a robot can perform acts that infringe IP,” said Liza Lam, associate director at Amica Law in Singapore.

If IP infringement is indeed committed, who will be held liable – the robot or the one who created it?

Under the Singapore Patents Act, the robot, being a machine and not a person, cannot be held liable for direct IP infringement. However, a person related to the robot, including its human creator or owner, may be held liable according to the concepts of indirect or secondary infringement or the like. Lam said indirect or secondary infringement exists in Singapore, where the indirect or secondary liability of a party is governed by the common law position on joint tortfeasors.

She explained: “A person may be liable as a joint tortfeasor where the party conspires with the primary party or induces the commission of the infringement, or where multiple parties join in a common design pursuant to which the infringing acts were performed. Applying this concept would seem that if the human creator or owner were to train the robot to carry out an act that causes the IP infringement, it’s possible for this person to be made liable as joint tortfeasor.”

Another probable scenario is if the robot evolves away from its initial training and develops its own intelligence to perform an act that infringes IP. “Would it then be reasonable to render this person liable as joint tortfeasor as it may seem to liken a case where a parent would become punishable for a crime his child commits out of the child’s own accord?” asked Lam.

Pushing the envelope further, Lam stated that it is foreseeable for a robot to develop enough intelligence to create another robot or AI system.

“It is not just another sci-fi myth. In such a case, what happens if the robot were to train the non-human-created robot to carry out an act that causes IP infringement?” she said. “I think these may be what the current laws may fail to adequately address, with the evolution of AI technology.”

Another question is what if a robot, human-looking or not, or AI system eventually evolves to be able to develop its own IP?

This has happened before, in the landmark case of DABUS (Device for the Autonomous Bootstrapping of Unified Sentience). Created by Dr. Stephen Thaler, a specialist in applied and theoretical artificial neural network technology, the AI system was programmed to invent on its own. Patent applications were filed in various jurisdictions for DABUS’s inventions: food and beverage containers with features that allow them to be joined together, which makes them easy for robots to handle, and devices and methods for creating a light-emitting beacon. In these patent applications, DABUS was named the sole inventor.

South African patent law has proved receptive to the idea of an AI system being named an inventor, and an Australian court initially took the same view before that ruling was overturned on appeal. Other jurisdictions, including the UK, Europe and the U.S., have rejected the corresponding DABUS patent applications. Under their laws, DABUS cannot be the inventor of an invention.

Despite this, Lam shared that overall, humans remain mindful of the robots’ reliability and the outcomes generated by AI. At the press event, one question posed was: “How can we trust you as a machine as AI develops and becomes more powerful?”

Lam echoed the above thought and said: “This same question, when taken from the IP perspective, may focus more on the term ‘machine’ with significant interest. This is because robots are essentially viewed as ‘machines,’ and a relevant question popping out would be, ‘As a machine, what would its legal rights be, if any?’”

AI forum news conference: Unimpressive and a PR stunt?

Some are not impressed with the human-robot news conference.

According to Christopher J. Rourk, a partner at Jackson Walker in Dallas, the robotics capabilities on display at the AI forum, including standing in place and making primitive hand gestures, were limited and unimpressive.

Emphasizing that robotics and AI are two distinct technologies presently used independently, Rourk said: “The functions did not showcase any particular capabilities that robotics adds to AI that might support UN SDGs, which include ‘no poverty’ and ‘gender equality.’ As such, if the purpose of the press conference was to showcase how the combination of robotics and AI could support the goals, there was nothing shown of any relevance.”

For him, the level of intelligence required for the combination of robotics and AI to be able to infringe IP is nonexistent based on the “showcase” presented at the news conference. But assuming robotics and AI can have such intelligence someday, determining whether there was infringement would depend on the facts.

“The type of IP involved would be of most relevance. There are already claims that large language models (LLMs) infringe the copyrights of human creators. Copyright law has evolved with technology, and whether infringement of copyrights by LLMs occurs may eventually be decided by courts and legislatures,” said Rourk.

Under patent law, direct and indirect infringement could be implicated, depending on factors such as whether the robotics and AI are provided by the same actors, whether one actor directed and controlled the other to infringe and so forth.

Rourk added: “Humans will also likely be involved in any application of AI-controlled robot technology for purposes related to the UN SDGs, not to mention that multiple robotics and AI systems will likely be combined and will likely work with other equipment for such goals. For example, if a human trains an AI tool with copyrighted content of others and uses the AI tool to create a work, then under existing law, it might be determined that the work is not original or creative based on those facts.”

As for the possibility of a robot with sufficient intelligence to create an AI system or develop its own IP, Rourk said an AI system could potentially develop another AI system, but it is unclear how robotics would be needed for that. There is also the question of what is meant by a ‘creator.’

“A human creator can infringe the IP of another human creator by copying it, so it is possible that an AI-controlled robot that copies other AI systems could be called a ‘creator’ of the copied system,” he explained. “This question highlights the importance of defining terms – there is an unfortunate tendency to use terms like ‘intelligence’ and ‘creator’ without a definition of what is meant by the terms.”

He related that a U.S. appeals court decided that photographs taken by a monkey are not copyrightable works. Courts in various jurisdictions could rule otherwise, based on their laws whose definitions of certain terms may be different.

According to Rourk, depending on how the term “creator” is defined, a robot could be a creator if it copies pre-existing IP, such as by using an LLM trained on copyrighted material created by humans. Alternatively, the output could be defined as a mere copy of that IP, much as a copy machine can be used by a human to infringe a copyright.

“A novel combination of pre-existing elements could also be created, but again, the selection by a human of which pre-existing elements are combined by an AI tool using a robot could be characterized as the act of development. A definition of what is meant by ‘developing IP’ is needed to meaningfully answer the question,” he said.

Meanwhile, Pankaj Soni, a partner at Remfry & Sagar in Gurugram, raised the question of whether the Geneva AI forum press conference could have been a PR stunt by the UN. He said there is a strong suggestion that the conference was a PR effort to create a positive impression of the UN’s desire to meet its sustainability goals – “typically directed to improving the global standard of living and making energy, water, food, etc. available to a larger chunk of the global population – and to highlight how the human-robot-AI interaction can help in achieving that goal without causing an existential threat to humanity.”

“In that context, a session by AI-enabled robots to show the positive and non-threatening implication on society is understandable,” he added. “Having said that, this is a topic that we cannot shy away from.”

Legislating and regulating AI

For Soni, the press event is not representative of where humanity is today. However, it may be representative of where it will be in the future, with the inevitability of AI and robots becoming a part of our daily lives.

“If at all, the takeaway from this press conference should be that there is a need to understand the possibilities, good and bad, in the realm of human-AI collaboration. From an IP perspective, there is a lot of speculation on how the IP fraternities will deal with AI, but the underlying theme is that we need to recognize the IP component of this technology,” he said.

This means understanding how the technology can create content and innovation, and the parameters for protecting innovation emerging from this technology. It also means identifying who will be responsible for infringement, ownership and other key factors, and who will be liable for the detrimental impact of this technology.

“There is also a need to develop a global standard on how these technologies will be used in the coming years since the potential of a negative implication is very high. Governments at local, regional, national and global levels should collaborate to identify the laws, rules and operating framework that need to be put in place to deal with this technology and the situations it will bring,” added Soni. “All this has to be understood, debated and has to be implemented, again, at the local, regional, national and international level.”

Soni likewise cited the DABUS case to illustrate the current state of IP thinking on AI. As the case shows, the IP world continues to struggle with the question of whether AI can be an inventor.

“Majority says that it cannot, but we should not stop there, and we need to take it a step further,” he said. “We should not necessarily work towards dealing with the situations when it happens, but be proactive and come up with options and solution so that there is clarity for the generation to come.” After all, he noted, it is the future generation that will have to deal with generative AI and robotics later on.

“Legislation is definitely needed to catch up with the AI technology development,” said Stephen Yang, managing partner at IP March in Beijing. “For now, I don’t see this happening. Many issues, such as AI inventorship and copyright for IP generated by AI, have arisen already, but there has not been any major movement in the IP world toward how this should be addressed. Legislation is lagging behind technology development.”

According to Rourk, IP laws, including copyright law, have evolved with the growth of technology. He added that, to a certain extent, IP laws are already prepared to handle the emergence of new technologies. This evolution of IP laws is likely to continue. He said whether such developments will create new needs for laws, enforcement, education and other human activities will depend on what the developments are and whether they merit new laws, new types of enforcement of laws, new forms of education and so forth.

Lam believes that in the future, the views of stakeholders may change and laws may need to be amended to cater to AI-generated IP. “For example, special provisions may be required to deal with IP infringement performed by a robot and special rights may be conferred to AI in generating the IP,” she remarked.

Lam added that a delicate balance should be struck between incentivizing, and thus promoting, the making and disclosure of AI-enabled innovations and maintaining the status of AI as a “machine.”

“It may take a bit longer for AI to be good enough to draft patent applications or respond to office actions, but in the foreseeable future, AI will at least increase the efficiency of work for both patent attorneys and examiners. I think the IP world should prepare itself to take advantage of the AI technology to increase work efficiency and assist in the decision-making process while still leaving the decision-making power in human hands,” said Yang.

To some, the human-robot press conference probably came as a revelation, affording humanity a preview of an era in which technology is potentially ever more powerful and dominant in different ways. But to others, the AI forum press event was nothing special.

Nevertheless, AI, including generative AI, is here. In technology companies and research institutions, AI-enabled robots, human-looking or not, and other AI-enabled tools are being developed for use across industries. The IP community ought to sit up and take notice because changes will definitely happen.